I am Yin Chen (陈 银), a Ph.D. student in Information and Communication Engineering at Hefei University of Technology (HFUT).

I’m currently focusing on Computer Vision, Multimedia Affective Computing, and Facial Expression Recognition. If you are interested in any form of academic collaboration, please feel free to email me at chenyin@mail.hfut.edu.cn.

🔥 News

- 2025.10: 🎉 New paper Static for Dynamic: Towards a Deeper Understanding of Dynamic Facial Expressions Using Static Expression Data has been accepted by TAFFC.

- 2025.09: 🎉 S2D has been recognized as a Highly Cited Paper by Web of Science (Top 1%).

- 2024.09: 🎉 New paper DAT: Dialogue-Aware Transformer with Modality-Group Fusion for Human Engagement Estimation has been accepted by MM 2024.

- 2024.08: 🎉 One paper, From Static to Dynamic: Adapting Landmark-Aware Image Models for Facial Expression Recognition in Videos, has been accepted by TAFFC.

📝 Publications

🎙Affective Computing

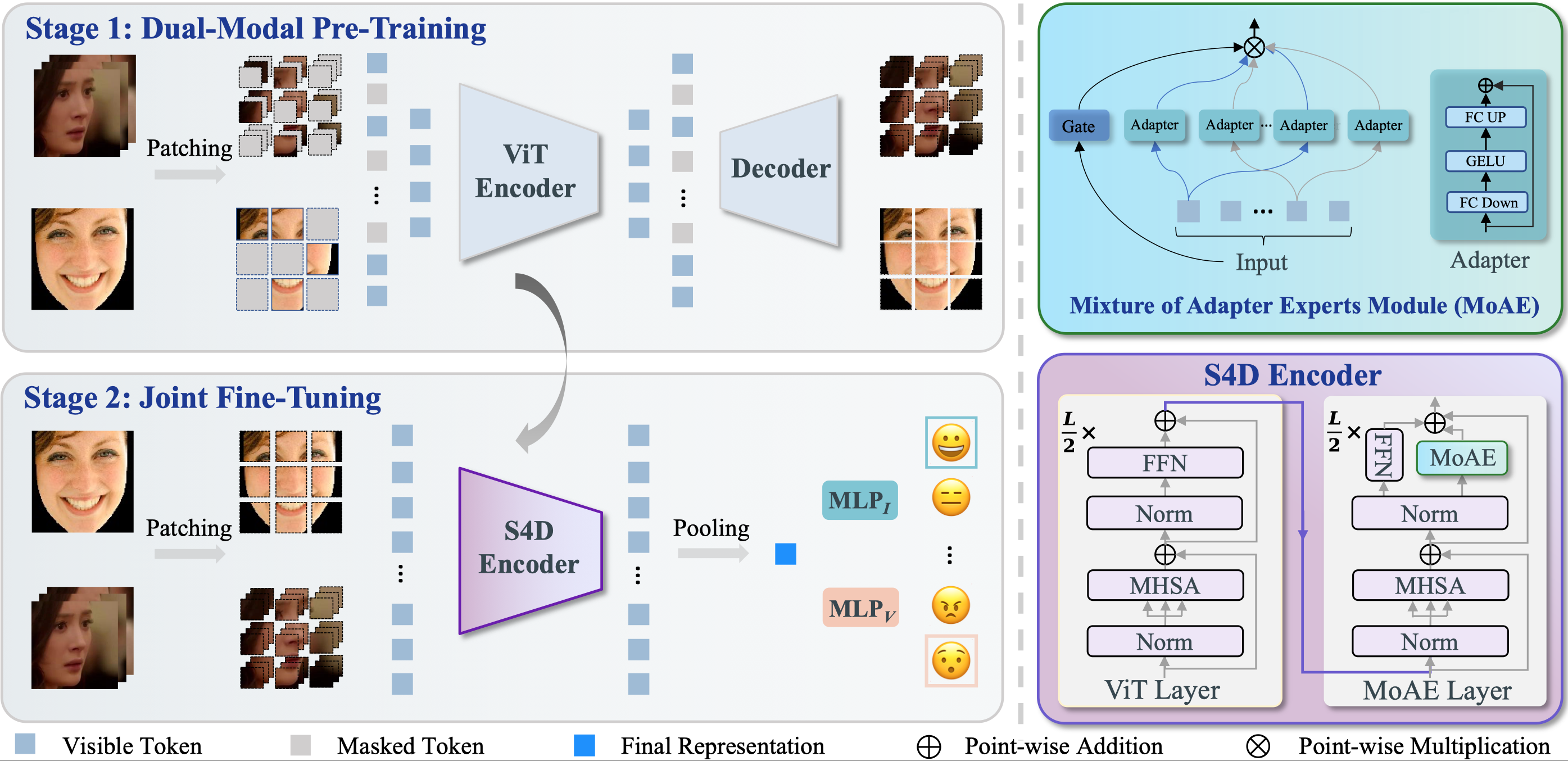

Static for Dynamic: Towards a Deeper Understanding of Dynamic Facial Expressions Using Static Expression Data

Yin Chen†, Jia Li, Yu Zhang, Zhenzhen Hu, Shiguang Shan, Meng Wang, Richang Hong*

- The official implementation of the paper: “Static for Dynamic: Towards a Deeper Understanding of Dynamic Facial Expressions Using Static Expression Data”.

Generalizable Engagement Estimation in Conversation via Domain Prompting and Parallel Attention

Yangchen Yu, Yin Chen, Jia Li, Peng Jia, Yu Zhang, Li Dai, Zhenzhen Hu, Meng Wang, Richang Hong

- The Winner’s Solution of MM 2025 Human Engagement Estimation Challenge.

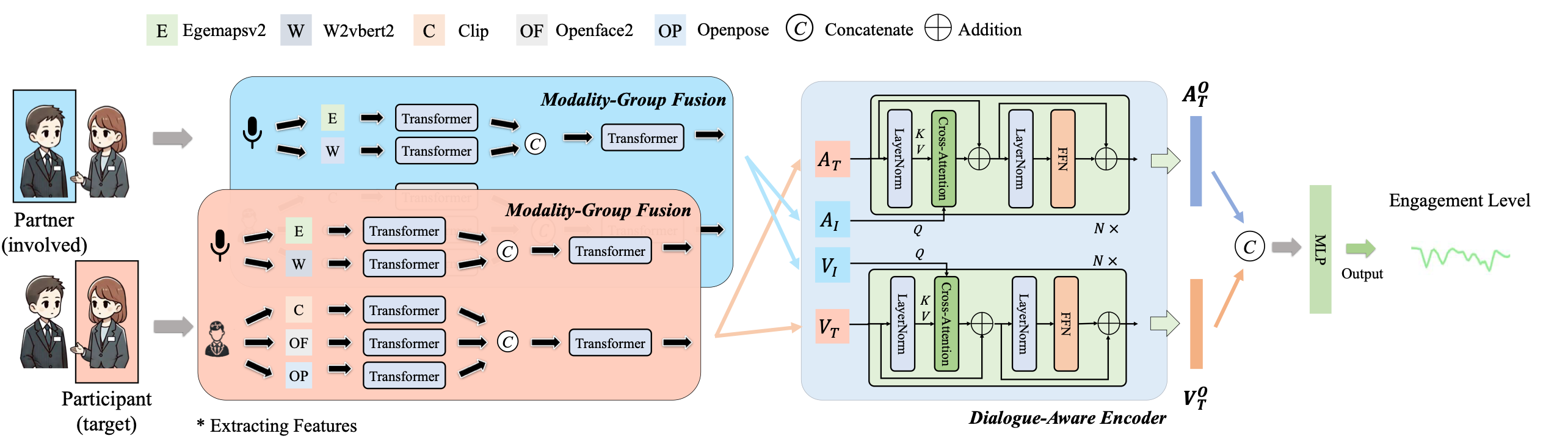

DAT: Dialogue-Aware Transformer with Modality-Group Fusion for Human Engagement Estimation

Jia Li, Yangchen Yu, Yin Chen, Yu Zhang, Peng Jia, Yunbo Xu, Ziqiang Li, Meng Wang, Richang Hong

- The Winner’s Solution of MM 2024 Human Engagement Estimation Challenge.

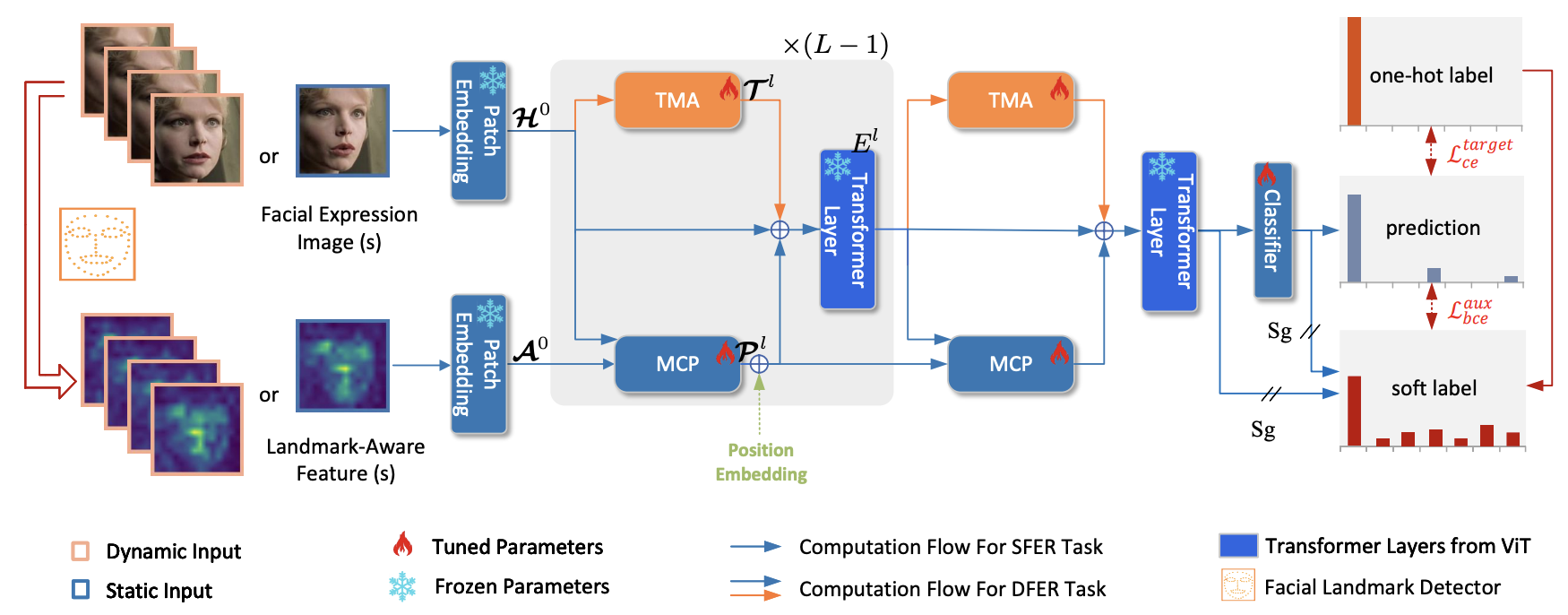

From Static to Dynamic: Adapting Landmark-Aware Image Models for Facial Expression Recognition in Videos

Yin Chen†, Jia Li†, Shiguang Shan, Meng Wang, Richang Hong

- The official implementation of the paper: “From Static to Dynamic: Adapting Landmark-Aware Image Models for Facial Expression Recognition in Videos”.

- ESI Highly Cited Paper (Top 1% in the field, as of June 2025).

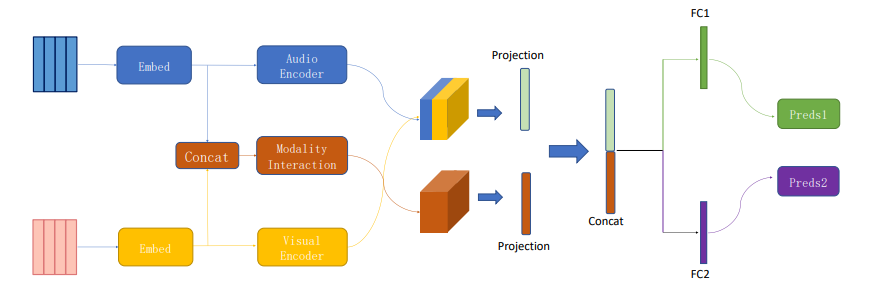

Multimodal feature extraction and fusion for emotional reaction intensity estimation and expression classification in videos with transformers

Jia Li*, Yin Chen*, Xuesong Zhang, Jiantao Nie, Ziqiang Li, Yangchen Yu, Yan Zhang, Richang Hong†, Meng Wang

- The Winner’s Solution of CVPR2023-ABAW5 Emotional Reaction Intensity (ERI) Estimation Challenge.

🎖 Honors and Awards

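- 2023 CVPR Workshop ABAW5, Emotional Reaction Intensity Estimation Challenge, Winner

- 2024 MM Workshop, Engagement Estimation Challenge, Third Place

- 2025 MM Workshop, Engagement Estimation Challenge, Winner

- Microsoft "Stars of Tomorrow" Award

- Hefei University of Technology First-Class Graduate Scholarship (×3)

- Hefei University of Technology Merit Student

- Hefei University of Technology Hubing Scholar Scholarship (×3)

- Hefei University of Technology Outstanding Graduate

- Hefei University of Technology Academic Scholarship (×2)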

📖 Education

- 2022.09 - now, Ph.D., Hefei University of Technology, Hefei, China.

- 2018.09 - 2022.06, Hefei University of Technology, Hefei, China.

- 2015.09 - 2018.06, Shangyu Chunhui Middle School, Shaoxing, China.

💬 Invited Talks

💻 Internships

- 2021.12 - 2022.09, Microsoft Research Asia, Beijing.